On Demand

Web Design Theory

Best Practices and Web Design Theory shows learners how to make their site extraordinary. Every student, whether a beginner or experienced, should take Best Practices and Web Design Theory, as it is intended to fill gaps in design education that typically take designers years to work out on their own, if they ever do.

On Demand

Outlook 2016 Advanced

Outlook is the most widely used corporate email and scheduling software in the world. It can manage your schedule, help keep your tasks on track, and store your contacts.

Virtual Classroom

Certified Ethical Hacker (CEHv12)

What happens when your information systems are vulnerable and their security is compromised? The best way to deal with ongoing security threats in IT is to learn to hack. That’s right! This Certified Ethical Hacker cyber security training program is designed to help candidates learn better ways to deal with security breaches and to ensure a secure environment for the organization.

Virtual Classroom

PowerPivot, Power View and SharePoint 2013 Business Intelligence Center for Analysts (MS-55049)

Looking for in-depth SharePoint training? This course is made for you! It builds your skills and knowledge of Excel 2013 Power View and PowerPivot.

Virtual Classroom

ITIL® Service Operation (ITIL®-SO)

This professional-led ITIL® Service Operation program is designed to help students learn and test their knowledge of ITIL® Service Operation.

Virtual Classroom

ITIL® 4 Foundations Certification

The ITIL® 4 Foundations Certification course is a comprehensive introduction to the IT Infrastructure Library (ITIL®) framework, a globally recognized set of best practices for IT service management. This course equips participants with a fundamental understanding of the key concepts, principles, and processes of ITIL® 4, enabling them to align IT services with business objectives and deliver value to their organizations.

Virtual Classroom

SharePoint 2013 Search Inside Out (MS-55037)

In search of a learning course that teaches you to design different types of search topologies? Our IT Ops training program is the one to choose!

Virtual Classroom

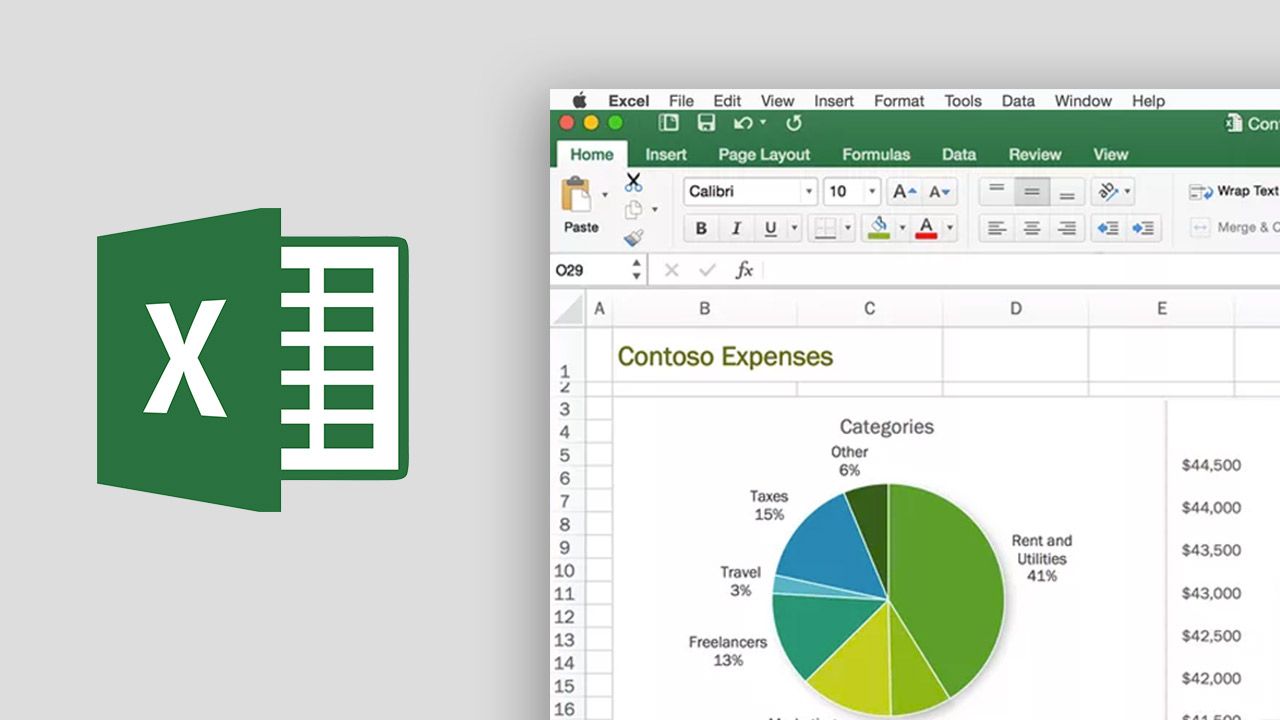

Microsoft Excel 2013 Advanced Level 3 (MS-Excel13-3)

This program builds on the basic and advanced concepts covered in the Microsoft Office Excel 2013 courses P1 and P2, enabling you to get the most from your Excel knowledge.

On Demand

JavaScript

This 9-hour JavaScript course is designed to take students from the fundamentals of JavaScript programming to advanced syntax, the DOM, event handling, and object-oriented programming (OOP). It is essential for anyone trying to get into web development who wants advanced knowledge of JavaScript.

Virtual Classroom

CompTIA Server+ (Exam SK0-005)

The Server+ certification validates the skills to build, maintain, support, and troubleshoot server hardware and software. The CompTIA Server+ exam covers server skills from 6 important IT domains: System Hardware, Software, Storage, IT Environment, Disaster Recovery, and Troubleshooting. This top-notch certification course builds on existing professional experience with personal computer hardware support to present the next tier of skills and concepts students will use on the job when managing any kind of network server.

Virtual Classroom

CompTIA IT Fundamentals+ (Exam FC0-U61) (CompTIA-ITF)

Upon successful completion of this IT Ops training course on CompTIA IT Fundamentals, you will be able to safely set up a basic workstation, including installing basic hardware and software and establishing basic network connectivity; identify and correct compatibility issues; recognize and prevent basic security risks; and carry out basic support techniques on computing devices.

On Demand

Social Media Strategist

Social media strategists are specialists at managing online social networks. They know how to identify target customers who may be interested in a specific product or service and outline ways for organizations to reach those customers.